Django Session store and DB Router

The Set

As our main database reached a heavy usage level because of all its different uses, we decided to go down the road to the “micro services” village. While doing so we also spotted that some of the heavy use was due to Django’s inner workings with sessions. Thankfully there is a way to avoid using the same database for everything in Django. You can easily tell Django to use multiple databases, but migrating from a single database to multiple databases needs extra care.

Django and databases

Django has several backends available for session handling. You can choose from file, signed cookies, cache (usually memcached), db or cached_db (a mix of cache and db, with the cache handling most of the read hits).

In our case we are using the last one: cached_db. By default Django stores all session data in the main database. That’s fine for a small to medium website, but as traffic goes up and more sessions are handled, that table tends to grow and the load on the database is no longer insignificant.
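For reference, selecting that backend is a one-line setting. The fragment below is a minimal sketch of a settings file, not our actual configuration; the cache alias shown is simply Django's default value.

```python
# settings.py (fragment)

# Dotted path of the session backend. "cached_db" serves reads from the
# cache, falls back to the database on a miss, and writes to both.
SESSION_ENGINE = "django.contrib.sessions.backends.cached_db"

# Entry in CACHES used for sessions ("default" is Django's default value).
SESSION_CACHE_ALIAS = "default"
```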

In addition to those different options Django has an interesting layer of abstraction that you can use in between the databases and the code : the db router.

In short: a router lets you pick a database depending on the model or app a query concerns, and Django routes the query to that database. The Django documentation on multiple databases covers the basics needed to write one, including the methods a router can implement: “db_for_read”, “db_for_write”, “allow_relation” and “allow_migrate” (named “allow_syncdb” before Django 1.7).

There are caveats to this described in the Django documentation. In short : you need to be careful about what tables you want to move to a separate DB. Some tables need to be moved together.
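To make this concrete, here is a minimal sketch of a router that sends the “sessions” app to its own database. The class name is hypothetical, the alias “new_session_store” is assumed to exist in DATABASES, and the “allow_migrate” signature shown is the Django 1.8+ one (1.7 passes the model instead of the app label).

```python
class SessionRouter:
    """Route the "sessions" app to its own database, leave the rest alone."""

    route_app_labels = {"sessions"}
    session_db = "new_session_store"  # assumed alias in DATABASES

    def db_for_read(self, model, **hints):
        if model._meta.app_label in self.route_app_labels:
            return self.session_db
        return None  # no opinion: Django tries the next router, then "default"

    def db_for_write(self, model, **hints):
        if model._meta.app_label in self.route_app_labels:
            return self.session_db
        return None

    def allow_relation(self, obj1, obj2, **hints):
        return None  # no opinion on cross-model relations

    def allow_migrate(self, db, app_label, model_name=None, **hints):
        if app_label in self.route_app_labels:
            # The sessions table is only created in the session database.
            return db == self.session_db
        if db == self.session_db:
            # Nothing else gets migrated into the session database.
            return False
        return None
```

A router like this is enabled by listing its dotted path in the DATABASE_ROUTERS setting.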

Sessions

Sessions reside in one table, and thankfully that table can live happily on its own as long as Django knows how to reach it.

The “definitive way” to do that is through a db_router. Adding a db_router is easy and will direct requests to a separate database in a heartbeat. The problem is that the new database knows nothing about the sessions currently stored in the main database. In practice the switch kills every session currently open, and their content is lost.

In our case we certainly don’t want that to happen. Our customers are very attached to their ongoing sessions and baskets. We are attached to those sessions too, for business reasons.

While a db router will be enough once all data has been moved to the new database we need something temporary to allow a smoother migration.

Proxy

When you care about your data you can do a very easy thing: start writing to both the old and the new store, and read from the new store before falling back to the old one. Of course this is only valid if the data handled by those stores has a concept of expiration. Once the expiration time has passed, you can consider the migration to the new store done and start the cleanup.
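The idea is independent of Django. The sketch below illustrates it with plain dicts standing in for the two stores; every name in it is hypothetical, and it assumes each entry expires a fixed time after it was last written.

```python
import time


class DualStore:
    """Write to both stores; read from the new one first, then the old one."""

    def __init__(self, old, new, ttl_seconds, clock=time.time):
        self.old = old          # dict: key -> (value, expires_at)
        self.new = new          # same shape as `old`
        self.ttl = ttl_seconds
        self.clock = clock
        self.started_at = clock()  # moment dual writes began

    def write(self, key, value):
        entry = (value, self.clock() + self.ttl)
        self.new[key] = entry
        self.old[key] = entry

    def read(self, key):
        now = self.clock()
        for store in (self.new, self.old):
            entry = store.get(key)
            if entry is not None and entry[1] > now:
                return entry[0]
        return None

    def migration_complete(self):
        # Anything that exists only in the old store was written before
        # dual writes began, so it has expired once one full TTL has passed.
        return self.clock() >= self.started_at + self.ttl
```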

To do so with Django we decided to add a set of two session backends : one inspired by the cached_db session backend and one inspired by the db session backend.

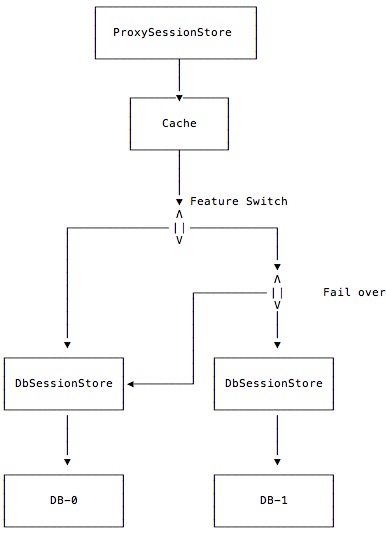

The first one acts in a way very similar to the cached_db one: it uses the cache as the primary source of data and then fails over to a database store. It differs from the original cached_db session backend in that it instantiates two db session backends with specific database index names (“None” or “default” and “new_session_store”). If our feature flag “migration_flag” is ON it tries to read the data from the new database, then fails over to the old database. It also writes data to both databases. If our feature flag “migration_flag” is OFF it only uses the main database (aka “old database”) for both reads and writes.

The second one only gives us a way to specify the Django database index name that an instance of the db session backend should use.

These are key to the main task of migrating to a new store. With the feature flag OFF the “proxy” acts like Django’s standard cached_db backend. With the feature flag ON the proxy still acts similarly but silently writes to both databases and tries to read from the new one first.
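The behaviour described above can be sketched without any Django machinery. This is not our actual backend: the cache and the two database stores are plain dicts, and the class name and the flag lookup function are hypothetical stand-ins. Only the flag name “migration_flag” comes from our setup.

```python
class SessionProxy:
    """Cache first, then the database(s), depending on a feature flag."""

    def __init__(self, cache, old_db, new_db, flag_is_on):
        self.cache = cache            # dict standing in for the cache
        self.old_db = old_db          # dict standing in for the main database
        self.new_db = new_db          # dict standing in for the new database
        self.flag_is_on = flag_is_on  # callable: flag name -> bool

    def load(self, session_key):
        data = self.cache.get(session_key)
        if data is not None:
            return data
        if self.flag_is_on("migration_flag"):
            stores = (self.new_db, self.old_db)  # new first, old as failover
        else:
            stores = (self.old_db,)
        for store in stores:
            data = store.get(session_key)
            if data is not None:
                self.cache[session_key] = data  # repopulate the cache
                return data
        return None

    def save(self, session_key, data):
        self.old_db[session_key] = data
        if self.flag_is_on("migration_flag"):
            self.new_db[session_key] = data  # silent second write
        self.cache[session_key] = data
```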

Router

Finally, once the migration of the data is done, we can switch the feature flag “new_db_router_flag” ON, which causes all requests related to the “sessions” application to go to the new database. At that point we can also turn the feature flag “migration_flag” OFF, as the db router now takes care of all traffic in an orderly manner.
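A router gated by that flag could look roughly like the sketch below. The FLAGS dict and the flag_is_on helper are hypothetical stand-ins for whatever feature-flag system is in use, and the class name is made up for the example.

```python
FLAGS = {"new_db_router_flag": False}  # stand-in for a real flag store


def flag_is_on(name):
    """Hypothetical flag lookup; replace with your own feature-flag call."""
    return FLAGS.get(name, False)


class FlaggedSessionRouter:
    """Route "sessions" to the new database only while the flag is ON."""

    def _target(self, model):
        if model._meta.app_label == "sessions" and flag_is_on("new_db_router_flag"):
            return "new_session_store"
        return None  # no opinion: Django keeps using "default"

    def db_for_read(self, model, **hints):
        return self._target(model)

    def db_for_write(self, model, **hints):
        return self._target(model)
```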

Preparations

An important step is to create the appropriate table in the new database. Once you have added the new database connection settings to your DATABASES django setting you can do the following :

# first check the list of migrations to run

#> django-admin migrate sessions --database new-db-name --list

# this should only list 1

# run the migration

#> django-admin migrate sessions --database new-db-name

Notice that we specify the name of an app (“sessions”) and the name of the database to run the migration on. This is very important.
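The value passed to --database is the key of the entry in the DATABASES setting. As an illustration only, such a setting might look like the fragment below. The database names and hosts are placeholders, and the alias “new_session_store” is the one used in the rest of this article.

```python
# settings.py (fragment); names and hosts are placeholders
DATABASES = {
    "default": {
        # On Django older than 1.9 the engine is
        # "django.db.backends.postgresql_psycopg2".
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "main",
        "HOST": "main-db.example.internal",
    },
    "new_session_store": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "sessions",
        "HOST": "sessions-db.example.internal",
    },
}
```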

Risks

We want to avoid losing sessions, so the “new_db_router_flag” feature flag must not be turned ON until all the data is migrated. Turning it ON earlier would cause exactly what we don’t want: sessions closing and data loss.

What happened

Thankfully we did not have many surprises when we deployed, started the migration and finished it. We added a step to verify that writing through our proxy class was compatible with the standard session storage class. Apart from that it went quite smoothly.

- We turned the feature flag “migration_flag” ON: writes were happening on both databases, reads were first tried on the new database, then on the old one.

- Our session ids expire after a couple of weeks, so we just waited for that time to pass.

- We verified that the rows in both databases matched, then turned the feature flag “new_db_router_flag” ON and the feature flag “migration_flag” OFF. All requests for the sessions table were then sent directly to the new database.

- We cleaned up the proxy code.
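The row verification in the steps above can be done in many ways. The sketch below shows one possible approach, not necessarily the one we used: it compares live sessions once the rows have been fetched from each database. The function name is hypothetical; the tuple fields mirror the session_data and expire_date columns of Django's session table.

```python
from datetime import datetime, timedelta


def compare_sessions(old_rows, new_rows, now):
    """Compare live sessions; rows map session_key -> (session_data, expire_date)."""
    live_old = {k: v for k, v in old_rows.items() if v[1] > now}
    live_new = {k: v for k, v in new_rows.items() if v[1] > now}
    # Live sessions that never made it to the new database.
    missing = sorted(set(live_old) - set(live_new))
    # Sessions present on both sides but with different content.
    mismatched = sorted(
        k for k in set(live_old) & set(live_new) if live_old[k][0] != live_new[k][0]
    )
    return missing, mismatched


now = datetime(2020, 1, 1)
later = now + timedelta(days=14)
old = {"a": ("x", later), "b": ("y", later), "gone": ("z", now - timedelta(days=1))}
new = {"a": ("x", later), "b": ("changed", later)}
print(compare_sessions(old, new, now))  # ([], ['b'])
```

Expired rows are ignored on purpose: they only exist in the old database and are exactly what the waiting period is meant to flush out.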

We did learn one thing here: our sessions table is not 70GB, it’s merely 16GB or so. That’s a big difference, and we do clean up regularly. So what’s happening? We probably need to run a more aggressive vacuum in PostgreSQL.

Opinion

Coming from a Ruby and Ruby on Rails background, working with this layer of abstraction (database routing) is very new to me. There are several libraries available to handle sharding or slave databases, but nothing built in (at least up to Rails 4, which I have used briefly) that does something similar.

Then again, the db_router is an “all or nothing” concept. Of course you can tweak things and get finer control through a more elaborate db_router; yet one does wonder.

Still, this concept of db_router is an interesting one and I do think it’s something missing in RoR (if there is one please tell me about it).

Conclusions

The biggest part of this task was actually figuring out how session backends and db_routers work in Django. Designing the two session backends was not complex, as it mainly meant adding options and doing double reads and double writes depending on a feature flag.

Django’s ability to use multiple databases without involving the application code is quite useful and deserves to be better known among developers. Knowing how to transition the data of specific tables into a separate store is very handy. Do read the docs and evaluate your needs and risks; this might be helpful.