Image Background Removal

At Lyst we process a lot of products from a lot of retailers. However, the product information we get from retailers isn’t consistent across the industry, because:

- Retailers may categorise products differently to competitors,

- Retailers may not provide every bit of information that we need,

- Retailers may have large catalogues and use vague auto-generated descriptions.

Because of this, we have to ensure that each product has all the information that we require (such as category, colour, etc.) and that this information is consistent across the site. For example, what Asos may consider a shoulder bag, Gucci may consider a handbag.

While we have a team of moderators who fill in the missing information, they obviously cannot keep up with our full incoming item traffic (750 items/s). To address this, we use automatic product classifiers to fill in as much information as possible. These are a combination of chained support vector machines and classifiers trained with stochastic gradient descent. They can currently classify 60% of our products with 99% accuracy (the sex panther method). Items that we cannot classify with enough confidence are passed to our human moderators.
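The hand-off to moderators can be pictured as a confidence threshold on the classifier output. This is a minimal sketch, not our production code: the function name `route_predictions`, the dictionary input format and the 0.99 cut-off are all hypothetical, and the real system chains several classifiers rather than scoring each item once.

```python
def route_predictions(predictions, threshold=0.99):
    """Split classifier output into auto-filled labels and a moderation queue.

    predictions: dict mapping item_id -> {label: probability}
    threshold:   hypothetical confidence cut-off (not a real Lyst value)
    """
    auto_filled, needs_review = {}, []
    for item_id, probs in predictions.items():
        # Take the most probable label for this item
        label, confidence = max(probs.items(), key=lambda kv: kv[1])
        if confidence >= threshold:
            auto_filled[item_id] = label      # confident: fill in automatically
        else:
            needs_review.append(item_id)      # unsure: send to a human moderator
    return auto_filled, needs_review
```

Raising the threshold trades coverage for precision: fewer items are filled automatically, and more reach the moderators.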

As part of our classification ecosystem, we have a number of classifiers that run on images. For example, one classifier determines the main colour(s) of an image. However, this is not as simple as it seems, as most product photos on Lyst have some kind of background. These backgrounds can be plain colours, gradients, or complex backdrops (e.g. a beach or a street). To correctly infer properties of the product in an image, the background must first be removed.
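A toy dominant-colour count shows why the background gets in the way. This is a sketch under stated assumptions, not our colour classifier: the image is a list of rows of `(r, g, b)` tuples, the optional alpha mask uses 0 for removed background, and colours are counted exactly, whereas a production classifier would probably group similar shades together.

```python
from collections import Counter


def dominant_colour(pixels, alpha=None):
    """Return the most common RGB value, skipping fully transparent pixels.

    pixels: list of rows, each a list of (r, g, b) tuples
    alpha:  optional list of rows of 0-255 opacity values (0 = background)
    """
    counts = Counter()
    for y, row in enumerate(pixels):
        for x, rgb in enumerate(row):
            # Ignore pixels that the mask marks as removed background
            if alpha is not None and alpha[y][x] == 0:
                continue
            counts[rgb] += 1
    return counts.most_common(1)[0][0] if counts else None
```

For a small red product on a large white backdrop, the unmasked call reports white; only once the background is masked out does the product colour win.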

Solutions

To approach this problem, we wanted methods that are fast and generalisable, so we chose image manipulation techniques over complex image recognition. We began with methods that would work on the simplest product images: those with plain backgrounds and a centrally positioned product, such as the following image.

One of the first background removal solutions we looked into was global adaptive thresholding. This tries to find a single colour value that lies between the background colour and the foreground colour. Each pixel is then classed as background or foreground depending on which side of this threshold it falls, and the result is used to create an alpha mask. This worked well with images such as the one above.
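The post does not say how the threshold value was chosen, so this sketch swaps in Otsu's method, a standard way of picking one global threshold automatically. It assumes an 8-bit greyscale image stored as a list of rows and a background lighter than the product; the function names are hypothetical.

```python
def otsu_threshold(gray):
    """Pick one global grey level t separating pixels <= t from pixels > t.

    Otsu's method: choose the t that maximises the between-class variance.
    gray is a list of rows of 0-255 ints.
    """
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * n for i, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = sum0 = 0
    for t in range(256):
        w0 += hist[t]            # number of pixels at or below t
        sum0 += t * hist[t]
        w1 = total - w0          # number of pixels above t
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        var = w0 * w1 * (mu0 - mu1) ** 2
        if var > best_var:
            best_t, best_var = t, var
    return best_t


def threshold_mask(gray):
    """Alpha mask assuming a light background: the dark side of t is product."""
    t = otsu_threshold(gray)
    return [[255 if v <= t else 0 for v in row] for row in gray]
```

The comparison `v <= t` encodes the light-background assumption; a dark backdrop would need the test flipped.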

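However, this method performed poorly on images with gradients or complex backgrounds: a single global threshold cannot separate a gradient whose values straddle it. It also struggled with lightly coloured products, which sit too close to a white background for any one cut-off to split them!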

To fix these issues, we needed a solution that would essentially determine where the product is. As we only wanted to use image manipulation methods, we had to rely on some fairly safe assumptions:

- The product will be in the image

- The product will be prominent in the image

As our images are all sourced from retailer sites, these assumptions are fairly safe.

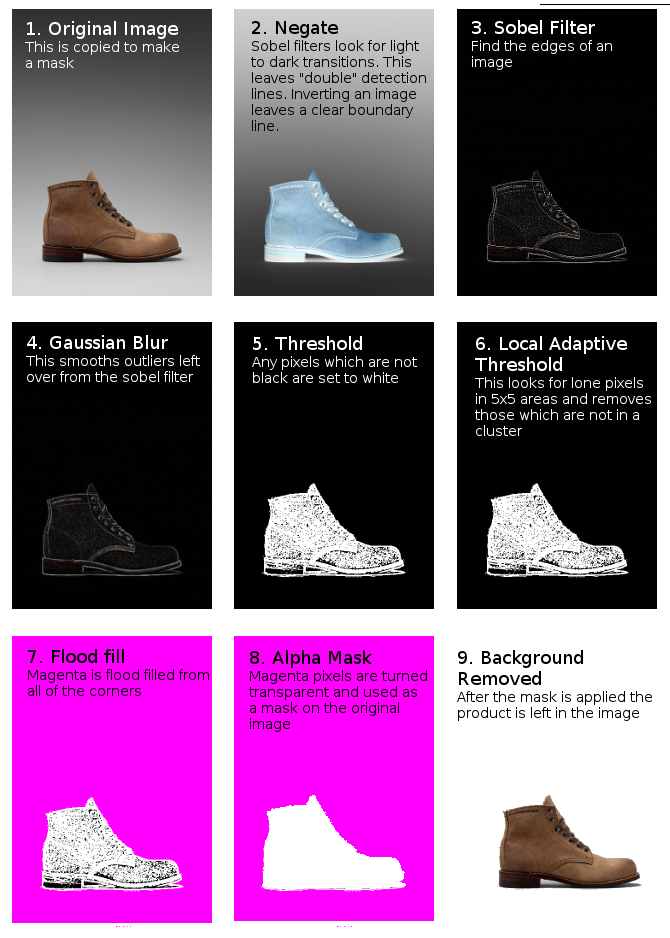

In terms of pixels, this means the transition from background to product will be harsh, producing detectable edges. Several edge detection methods exist, but we use the GraphicsMagick edge() function (which is a Sobel filter, according to the GraphicsMagick source), since we use pgmagick for most of our image processing. We also tried local adaptive thresholding, which worked just as well as the Sobel filter but tended to add larger borders to the transparency masks, which we wanted to minimise.
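The edge-then-fill idea can be illustrated without GraphicsMagick. This pure-Python sketch computes a Sobel gradient magnitude on a greyscale image (a list of rows of 0-255 ints, assumed for self-containment), marks strong gradients as edges, then flood-fills from the four corners through non-edge pixels, mirroring the four corner flood fills in our workflow. It omits the negate, blur and adaptive-threshold steps, and the `edge_threshold` default of 64 is a hypothetical value, not one taken from our code.

```python
import math
from collections import deque


def sobel_magnitude(gray):
    """Sobel gradient magnitude, clamped to 0-255; borders are replicated."""
    h, w = len(gray), len(gray[0])
    gx_k = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
    gy_k = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp coordinates so border pixels reuse their neighbours
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    v = gray[yy][xx]
                    gx += gx_k[dy + 1][dx + 1] * v
                    gy += gy_k[dy + 1][dx + 1] * v
            out[y][x] = min(255, int(math.hypot(gx, gy)))
    return out


def background_mask(gray, edge_threshold=64):
    """Alpha mask: flood-fill non-edge pixels from the four corners.

    Pixels reachable from a corner without crossing an edge are treated as
    background (alpha 0); everything else is kept (alpha 255).
    """
    edges = sobel_magnitude(gray)
    h, w = len(gray), len(gray[0])
    is_edge = [[edges[y][x] > edge_threshold for x in range(w)] for y in range(h)]
    alpha = [[255] * w for _ in range(h)]
    queue = deque()
    # Seed the fill at each corner (the product is assumed not to touch them)
    for y, x in ((0, 0), (0, w - 1), (h - 1, 0), (h - 1, w - 1)):
        if not is_edge[y][x] and alpha[y][x] == 255:
            alpha[y][x] = 0
            queue.append((y, x))
    # Breadth-first flood fill over 4-connected, non-edge pixels
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if (0 <= ny < h and 0 <= nx < w
                    and alpha[ny][nx] == 255 and not is_edge[ny][nx]):
                alpha[ny][nx] = 0
                queue.append((ny, nx))
    return alpha
```

On a dark square centred on a light background, the Sobel response also fires on the background pixels just outside the square, so the mask keeps a one-pixel halo of background around the product: the same kind of border this filter is meant to keep small. Background enclosed by the product (for example inside a bag handle) is not reachable from the corners and is kept as well.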

In the end, our workflow was fairly succinct and looked like this:

```python
import pgmagick as pg


def trans_mask_sobel(img):
    """ Generate a transparency mask for a given image """
    image = pg.Image(img)

    # Find object: invert, detect edges, smooth, then threshold the edge map
    # so that the product outline becomes a (hopefully closed) boundary
    image.negate()
    image.edge()
    image.blur(1)
    image.threshold(24)
    image.adaptiveThreshold(5, 5, 5)

    # Fill background: flood-fill magenta from each of the four corners,
    # then make every magenta pixel transparent
    image.fillColor('magenta')
    w, h = image.size().width(), image.size().height()
    image.floodFillColor('0x0', 'magenta')
    image.floodFillColor('0x0+%s+0' % (w - 1), 'magenta')
    image.floodFillColor('0x0+0+%s' % (h - 1), 'magenta')
    image.floodFillColor('0x0+%s+%s' % (w - 1, h - 1), 'magenta')
    image.transparent('magenta')

    return image


def alpha_composite(image, mask):
    """ Composite two images together by overriding one opacity channel """
    compos = pg.Image(image)
    # CopyOpacity replaces the opacity of `compos` with that of `mask`
    compos.composite(
        mask,
        mask.size(),
        pg.CompositeOperator.CopyOpacityCompositeOp
    )
    return compos


def remove_background(filename, out_filename='out.png'):
    """ Remove the background of the image in 'filename' """
    img = pg.Image(filename)
    transmask = trans_mask_sobel(img)
    img = alpha_composite(img, transmask)
    # Crop away the now-transparent border around the product
    img.trim()
    img.write(out_filename)
```

Running this code on the failure cases above gives some pretty decent (but not perfect) results:

Conclusions

While this technique isn’t yet good enough for front-end images, it is a useful tool for pre-processing images before colour classification. It usually handles images with plain or gradient backgrounds. Even where it has not fully removed the background, it has usually removed more background than product, so the result is still useful for colour classification.

More Examples